10 Tips for getting a good response from the ArcGIS Survey123 AI assistant

What does ArcGIS Survey123 have in common with ArcGIS Pro, ArcGIS Hub, and ArcGIS Business Analyst? AI Assistants! The Survey123 assistant chatbot in the web designer can help you build a survey with just a few prompts, but getting it to do exactly what you want isn’t always so straightforward. I’m sharing tips on potential issues to look out for, and suggestions to help generate the best first draft possible.

After ArcGIS Pro and ArcGIS StoryMaps, ArcGIS Survey123 is one of the most widely used ArcGIS applications by schools in Canada. The user-friendly, form-based data collection app is intuitive enough to be used in K-12 classroom projects, but also lends itself well to higher education research surveys with a variety of question types, integrated data visualizations, and opportunities for advanced functionality through Survey123 Connect. And now, users can save time in the initial drafting stages by asking the new Survey123 assistant chatbot to add, remove, and revise questions and answers, as needed. Once they are satisfied with the write-up, they can generate a survey draft and open it in the web designer, where it can be tweaked further.

The benefits of the underlying generative AI technology go beyond purely saving time. It can help you with wording choice, tone, and basic question organization; prepare an initial translation of the survey into another language for you to review; and even suggest questions and answer options when you need help fleshing out your survey. While it may not get you a perfect first draft right off the bat, it can still provide you with a good starting point and is worth trying out, especially while it’s in beta and credit-free!

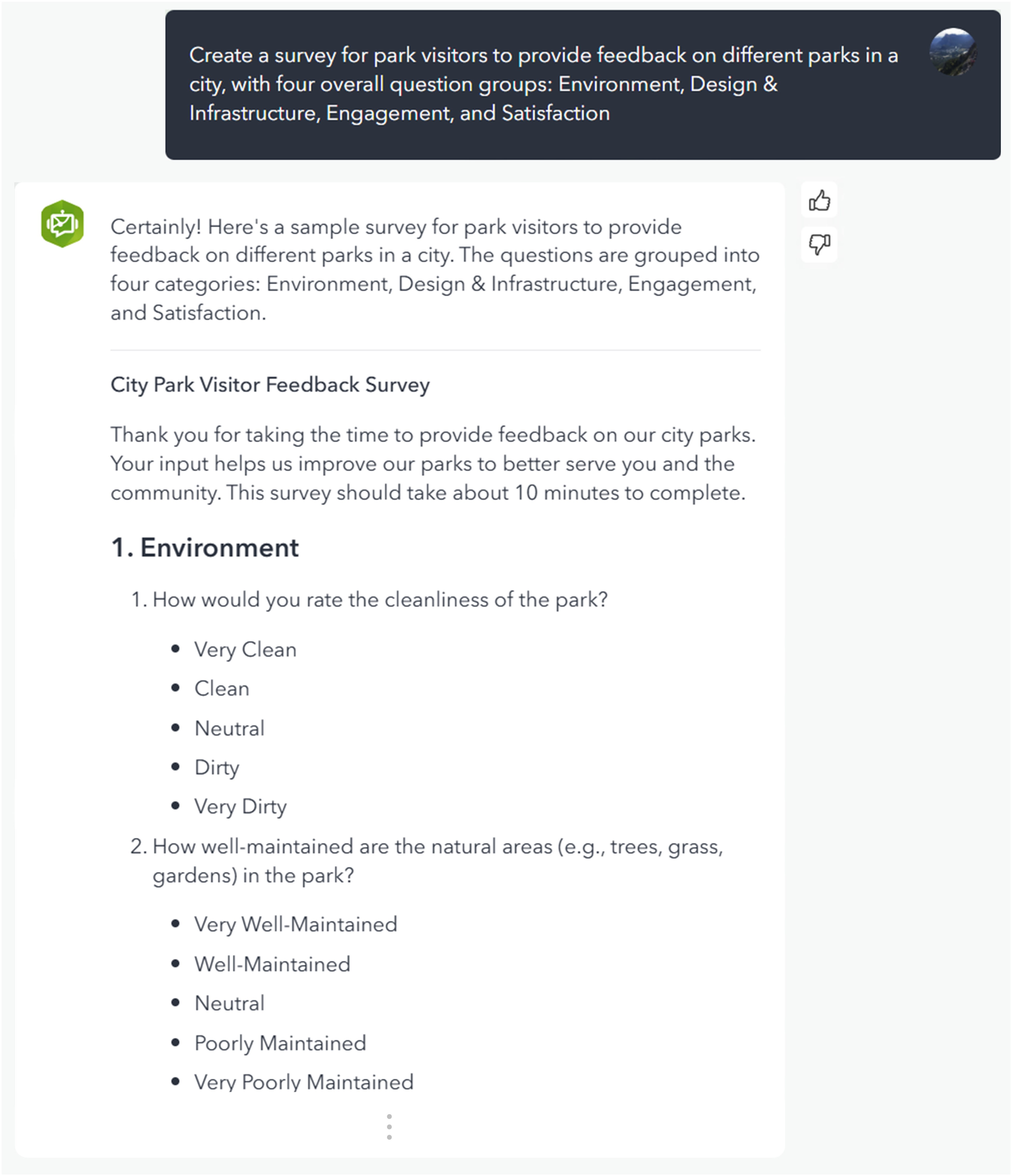

I used the AI assistant to create a parks visitor feedback survey whose responses I could feed into a parks assessment app, and made sure I left myself some time for exploratory detours along the way. Here are ten observations, tips, and tricks I’ve learned that can help in getting the assistant to design a survey the way you want:

1. The assistant can provide you with all the answers (and questions)!

You can ask for pretty much anything when it comes to survey questions: general questions you would like, the range and number of potential answers, wording revisions, etc. The prompts you give can be clear, direct instructions, or more open-ended requests with multiple potential approaches.

If you’re not sure how to start, you can request a survey on a topic, and the assistant will provide you with an initial set of questions and response options!

Examples of explicit revision requests.

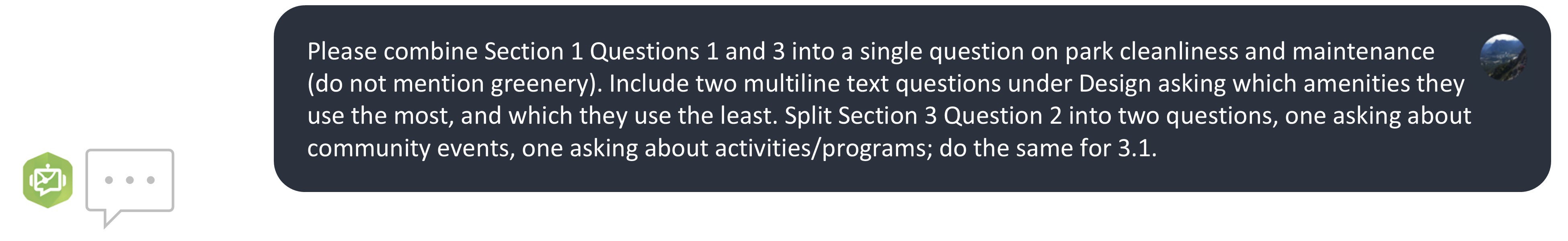

2. The assistant understands prompts surprisingly well, regardless of linguistic style or consistency, which can help save time over typing with proper grammar.

However, you should still try to use clear wording and complete sentences to avoid potential errors. And it’s always nice to say please!

The assistant understood each of these instructions, despite poor wording.

One prompt is much longer and more detailed than the other, but they deliver comparable results!

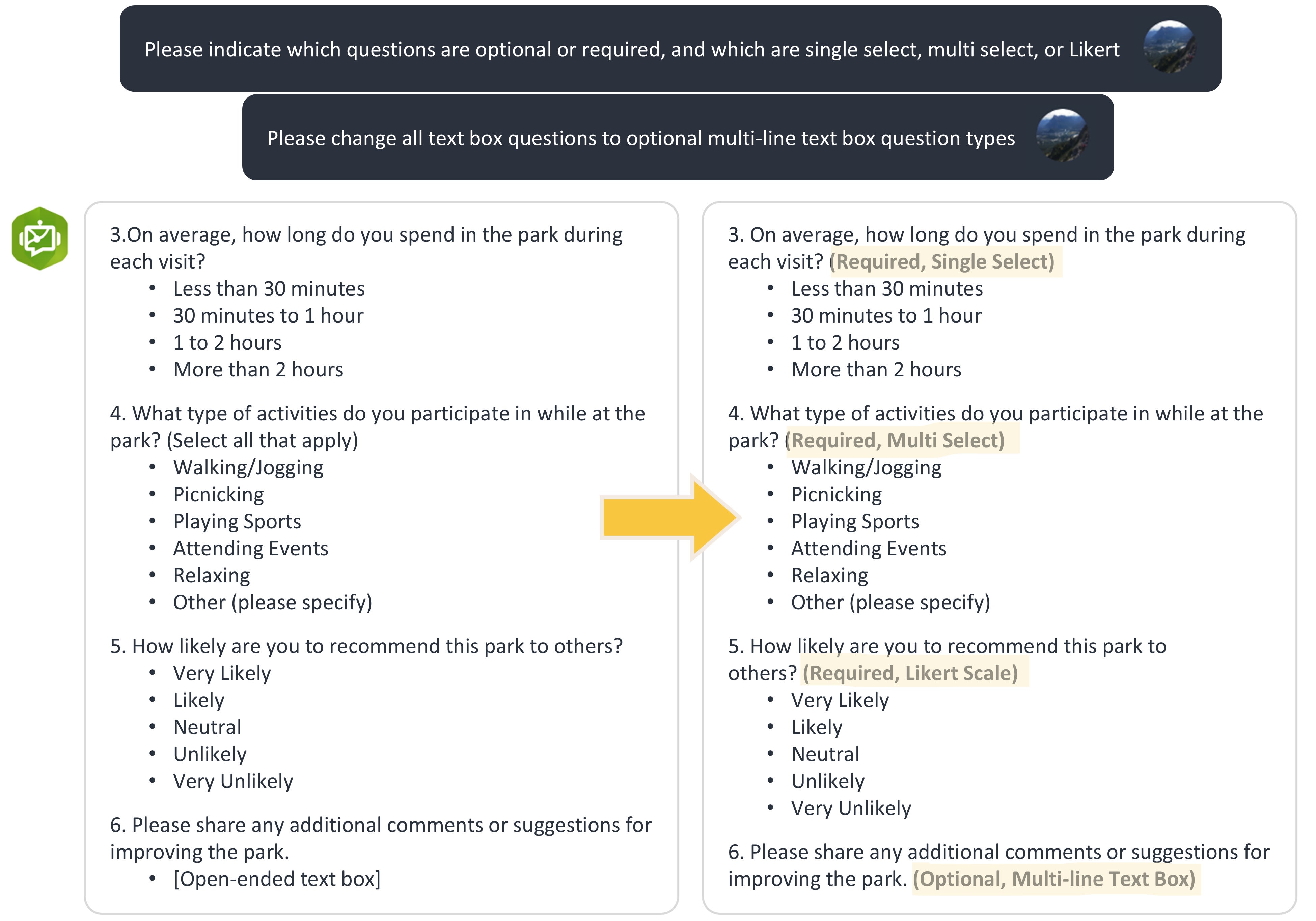

3. The assistant doesn’t always show the question type by default. You can ask it to indicate the question type so that you can make any necessary changes prior to generating a survey draft.

Are the questions going to be single-select? Multi-select? Likert? Required? By requesting indicators, you can easily see and change the question type, without first having to generate the survey.

My first prompt helped clarify the question types, while the second ensured that all text box type questions throughout the survey were optional, and multi- rather than single-line.

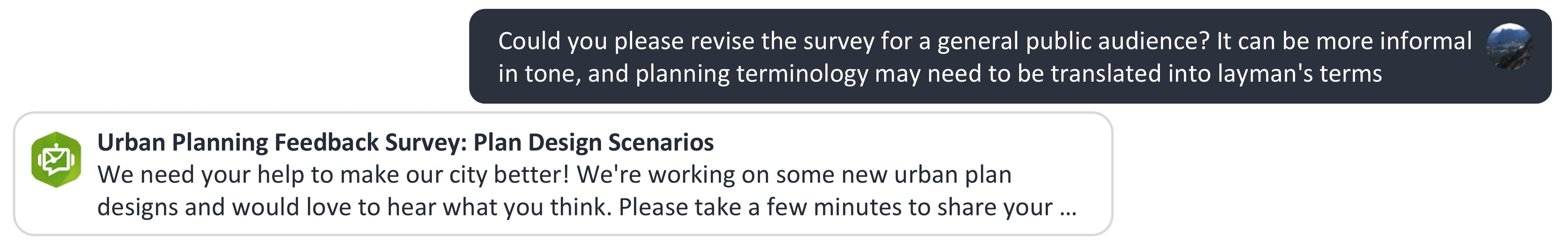

4. The assistant can modify the tone of the survey. Depending on your audience, you can ask it to apply a more technical slant to your survey, or put it all in layman’s terms.

In an exploratory detour, I first created an urban design scenario feedback survey targeted towards urban planners, and then asked the assistant to revise the survey for a general public audience, for comparison.

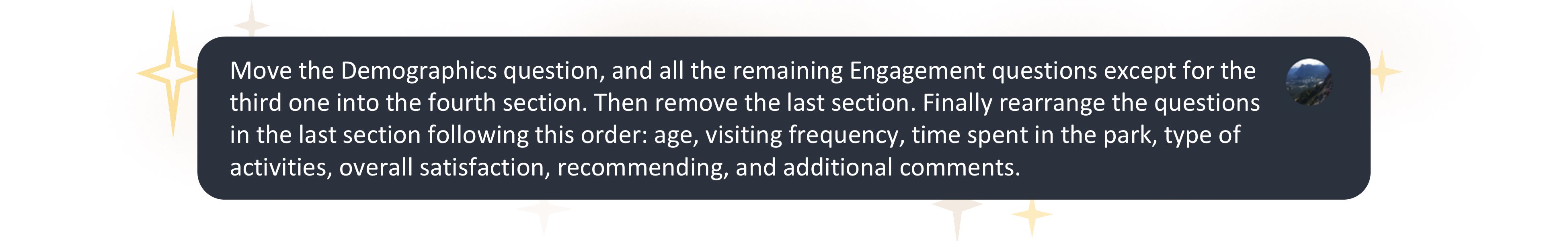

5. The assistant is great for basic organizing, but don’t expect it to help you out with appearance or more advanced layout formatting.

What it can do: adjust your survey header, description, and thank you message; and reorder questions or move them to specific groups (well, sort of – see below).

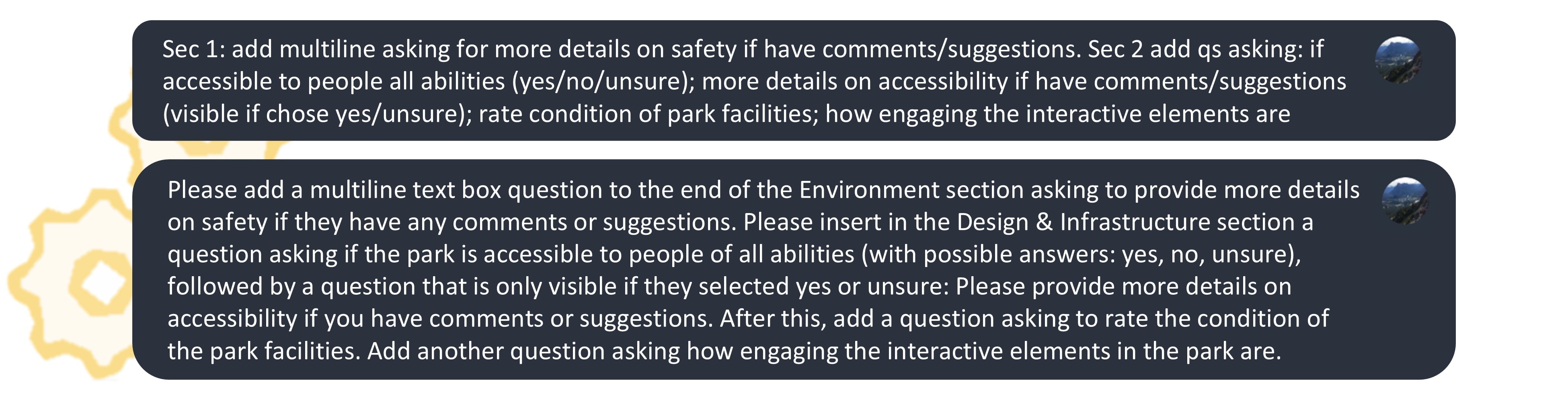

What it cannot do (despite what its responses might lead you to believe): set visibility rules, write hint text, or configure anything appearance related. It also cannot successfully recreate group structures in the web designer, though you may still find it helpful to create groups to get your questions organized.

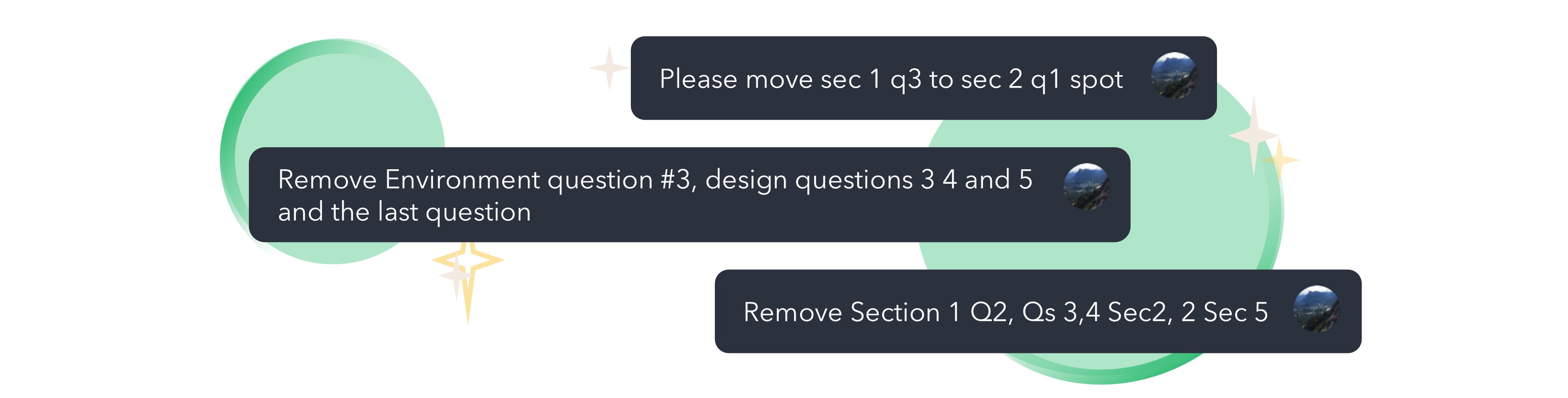

Asking the assistant to move questions to different sections and place them in a specific order works.

Groups, appearance settings, and visibility rules from the AI assistant are not applied when the survey is converted to a draft in the web designer.

6. You can always jump back into the assistant after generating a survey draft in the web designer and continue to make changes...

Your prompt and revision history will persist, even after multiple days, exiting a session, signing out and back in, or reviving a survey you accidentally deleted, as long as you are using the same browser and don’t clear your cookies. If you do lose your assistant history but want to continue building on what you had, you can feed in questions from the last generated draft, if you have one. The assistant can’t read in questions from the designer, but you can copy questions and response options from the preview window and paste them in your prompt. Just remember, if you have any conditionally visible questions you want to copy, you will first need to select the correct responses for the questions that make them visible.

7. ... However, manual changes made in the web designer will not be reflected in the assistant’s version of the survey!

Because the assistant can only access its own history, any manual changes you made in the web designer will be lost if you reopen the survey in the assistant and generate a new draft without first repeating those changes in the assistant. This is particularly important to remember if you’ve made any appearance or layout changes (see tip 5), because these cannot be dictated or copied to the assistant and would need to be redone in the web designer.

8. If you are trying to recreate an existing form (e.g. an official government field survey), it is probably better to copy and paste the questions than to ask the assistant to replicate the form.

The assistant isn’t able to read in the exact questions from an existing form name or URL. Interestingly, it does seem to understand the general topics in the form (even if there appear to be no hints in the URL), so you may get similar, but not identical questions.

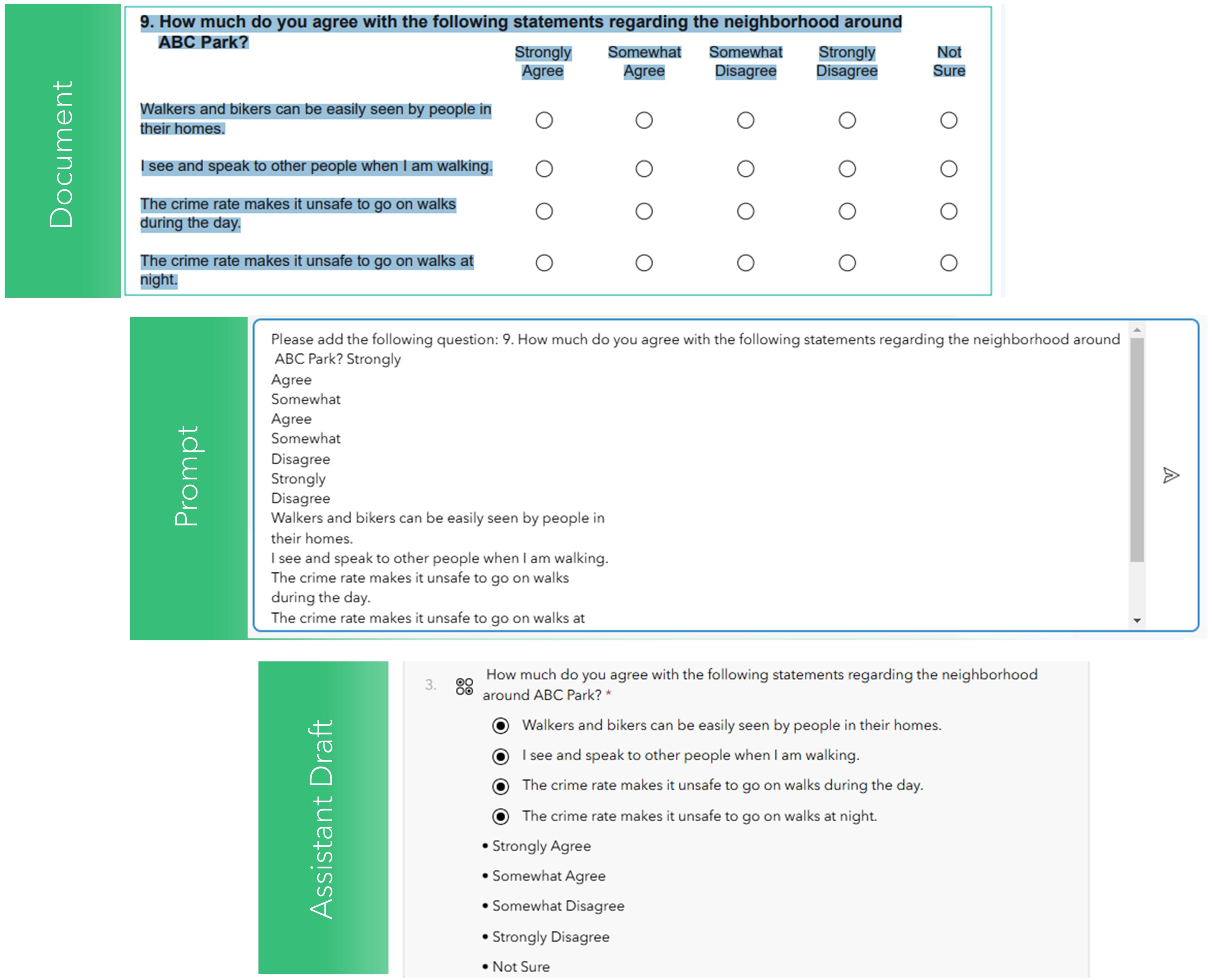

If you want the exact questions, copying and pasting does a pretty good job, despite the initial messiness that ensues! In this example, the assistant accurately recreated a single-select grid type question from the prompt text.

9. Do not assume it worked correctly (or did anything at all) just because the assistant replies, ‘Certainly!’ Always check that the assistant actually did what you asked it to.

As mentioned, appearance and layout elements will not carry over to the web designer, so the assistant can tell you that it has modified the form appearance without having actually changed anything.

There were other instances where things did not turn out as expected, such as when I asked it to revert to a specific previous revision and it pulled up the wrong one, or when it interpreted a request more broadly than I intended.

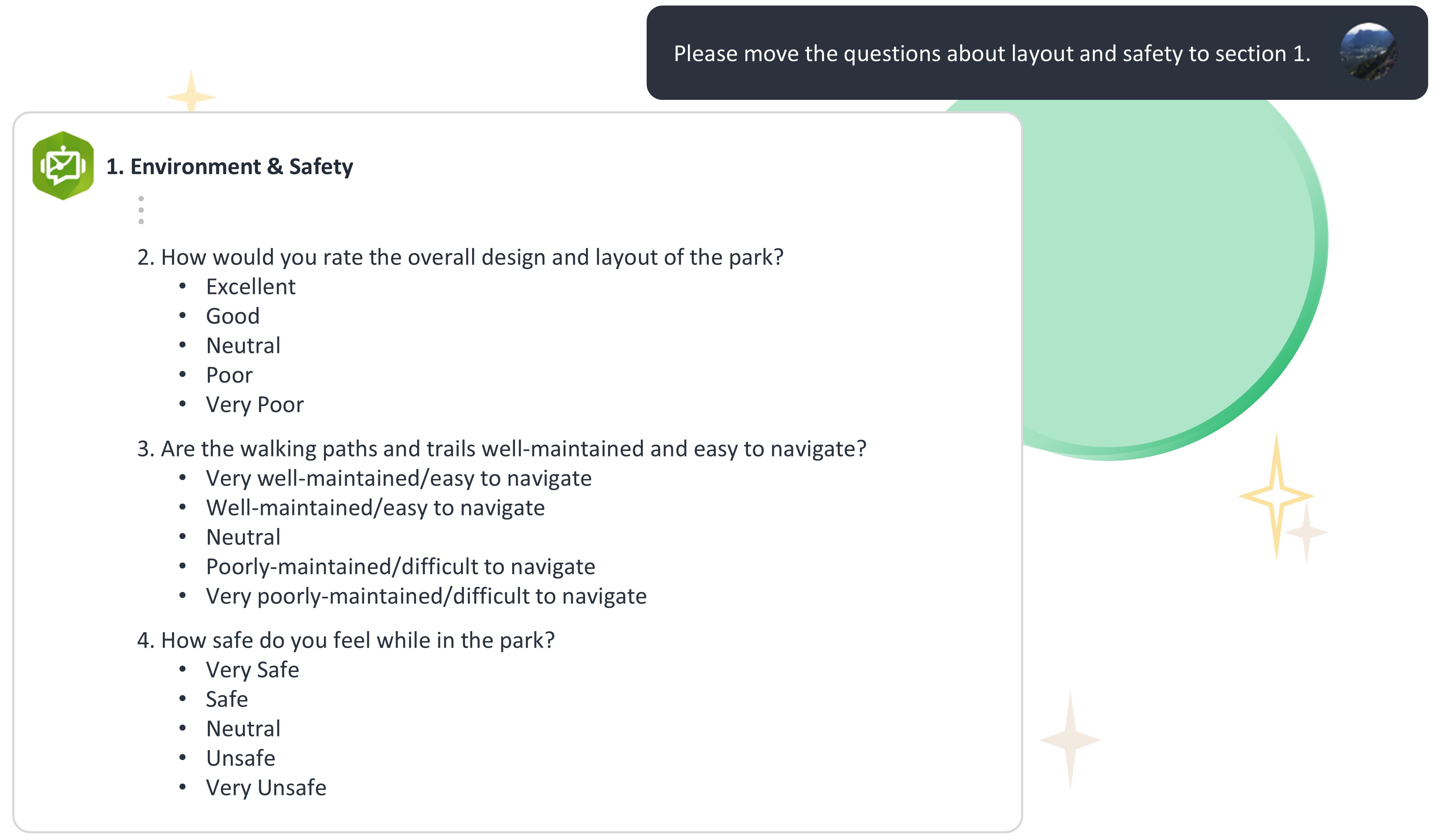

If you aren’t explicit, the assistant will infer meaning, and sometimes interpret words and phrases differently from how you intended. In this example, its interpretation of “layout and safety” included a question about trail maintenance and ease of navigation.

10. Similarly, always do a final check for unexpected AI contributions to make sure that it didn’t do something you didn’t ask it to.

Sometimes when I copied questions from an earlier draft to a new survey it would create the draft as I’d intended, and sometimes it provided feedback, making the executive decision to incorporate its own suggestions. If you don’t take time to review the output, you may be confused later when you suddenly find questions unexpectedly missing, added, or altered.
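If you keep your intended questions in a plain list, a few lines of Python can make this final check less tedious. The sketch below is only an illustration and sits entirely outside Survey123: the `compare_questions` helper and the sample question text are hypothetical, and it assumes you have manually copied the question text out of the preview window, since the assistant does not offer an export for this.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def compare_questions(intended, generated):
    """Return questions missing from, and added to, the generated draft."""
    intended_map = {normalize(q): q for q in intended}
    generated_map = {normalize(q): q for q in generated}
    missing = [q for key, q in intended_map.items() if key not in generated_map]
    added = [q for key, q in generated_map.items() if key not in intended_map]
    return {"missing": missing, "added": added}


# Hypothetical sample data: the questions I meant to ask, and the
# question text copied by hand from the generated draft's preview.
intended = [
    "Which park did you visit?",
    "How would you rate the park's cleanliness?",
    "Any other comments?",
]
generated = [
    "Which park did you visit?",
    "How would you rate the cleanliness of the park?",
    "Any other comments?",
    "How often do you visit parks?",
]

report = compare_questions(intended, generated)
for label, questions in report.items():
    for question in questions:
        print(f"{label}: {question}")
```

Because the comparison is on exact (normalized) wording, a reworded question shows up twice, once as missing and once as added, which is a handy cue to look at that pair more closely.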

Is the AI assistant right for you?

Overall, I would say using the assistant is a good choice if you are designing a longer survey and aren’t sure what the final content will be. This includes using the assistant for help with brainstorming survey content, filling in gaps you may have missed, or general wording.

If you have a shorter survey and know exactly what you want to ask, then it will probably be faster to just configure the form manually. However, if you already have a digital copy of the survey questions and answers somewhere on your computer, or you are drawing on questions from existing digital surveys and documents, then it may actually be faster to create the form with the assistant by simply copying and pasting your content in. Whenever you do use the assistant, it is a good idea to make the majority of your revisions in the assistant before generating a draft, and to save smaller changes and appearance-related modifications for the very end, when you can make them manually in the web designer.

Note that the small snags and issues I mentioned here are things I noticed at the time of writing, and could very soon be irrelevant as the assistant is always improving! I only touched on the Survey123 assistant, but there are other cool AI capabilities you can try out, such as auto translation in the web app, or extracting information from images using Survey123 Connect and the field app.

If you don’t see the assistant chatbot in the web designer, contact your institution’s ArcGIS Online administrator to ask them to enable AI assistants.

For more information on AI in ArcGIS, see Advancing Trusted AI in ArcGIS and AI Transparency Cards.