GIS Projects: Triaging Considerations Series - Part 5

Continuing this six-part series on a six-pillar framework for navigating and prioritizing GIS projects, Part 5 addresses understanding data. By the end of the series, you'll have a handy guide to assist you in triaging projects, making the process smoother and more manageable. If you stay tuned until the end, I’ll also include a cheat sheet to help.

As prefaced in Parts 1 through 4, it's important to note that this framework is based on my experiences as a consultant and technical solutions specialist. It draws from personal project-level experience and relative risk assessments and may not fully align with your organization's stance on technology, training and capacity, business workflows or security and privacy.

The Six Pillars for GIS Project Triage Consideration

Framework for triaging contains 6 pillars

Just to review from my previous post, this framework considers six pillars for triaging GIS project requests:

- Return on Investment (ROI): Does the project have a tangible return on investment?

- Risk: What associated risks are anticipated with the project?

- People: What capacity is required for the project and its sustainment throughout its typical lifecycle?

- Technology: What pieces of technology or equipment are required to ensure the project's success?

- Data: Is the data involved in the project accurate, secure, accessible and timely?

- Application & Project: What are the project deliverables, outcomes and measures of success?

This blog post will focus on understanding data considerations for successful project implementation.

Understanding Data Requirements

Understanding Data

This will be one of the longer posts in this series, because data is foundational to how a project will be delivered and whether it will be delivered successfully.

Understanding the CIA Triad (Security)

The CIA Security Triad

The Chief Information Officers (CIOs) and Chief Security Officers (CSOs) reading this will be intimately familiar with the CIA Triad. For those not steeped in the information security world, the acronym “CIA” isn’t just a spy agency that inspires novels and movies – it is a foundational tenet of cyber security that helps organizations find balance between Confidentiality, Integrity and Availability of data. Adopting a security and privacy framework that favours one side of the triad over the others will affect all the other pillars I have covered in previous posts.

- Confidentiality ensures that sensitive geospatial data, such as the locations of critical infrastructure or private property details, is accessible only to authorized individuals. Protecting this data from unauthorized access helps prevent misuse, privacy breaches and potential security threats. Strong encryption methods and access controls are essential practices for maintaining confidentiality.

- Integrity refers to the accuracy and reliability of geospatial data. It is crucial that the data remains unaltered during storage, transmission and processing unless authorized changes are made. Ensuring data integrity involves using named user identities for auditing and version control systems to detect and prevent unauthorized modifications. Maintaining the integrity of geospatial data is vital for making accurate decisions, as any corruption or tampering can lead to incorrect analyses and potentially harmful outcomes.

- Availability ensures that geospatial data is accessible to authorized users whenever needed. This involves implementing robust ArcGIS Enterprise deployments and infrastructure to prevent downtime and ensure data can be retrieved quickly and efficiently. Regular backups, disaster recovery plans and redundant systems are critical to maintaining availability. For geospatial data, which is often used in real-time applications like navigation and emergency response, high availability is essential to support timely and effective decision-making.

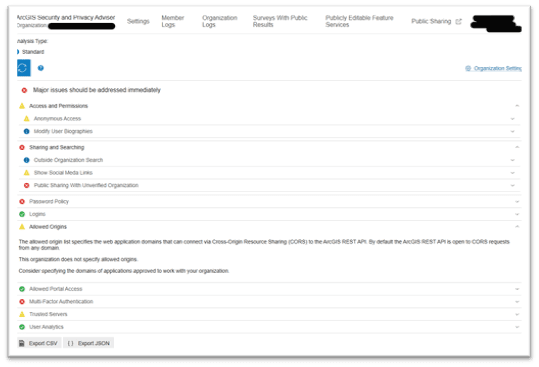

In fact, if you already have an ArcGIS Enterprise or ArcGIS Online deployment, you can launch our free ArcGIS Security and Privacy Adviser tool to audit your implementation against IT security best practices.

Example of Security Adviser Audit

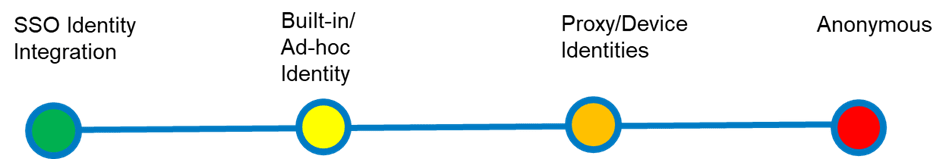

Understanding Confidentiality

In the context of the CIA triad (Confidentiality, Integrity, Availability), geospatial data confidentiality can be framed by assessing the risk levels of different identity integrations. SSO identity integration, for example through Entra using OAuth, SAML, IWA or LDAP, poses the lowest risk due to robust authentication mechanisms. Built-in application identities and ad-hoc identities, which are not based on federated identities, present a low to medium risk. Proxy or headless accounts, often used on shared mobile devices, are high risk due to potential misuse and lack of accountability (as mentioned in this blog, these types of accounts are not supported). The highest risk arises from data that is not protected at all, where anonymous read/write access is granted, which can lead to significant confidentiality breaches.
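When scoring a proposal, the ordering above can be captured as a simple lookup. The following is a minimal sketch only: the tier names and numeric scores are illustrative assumptions of mine, not part of any ArcGIS API or official scoring scheme.

```python
from enum import IntEnum


class IdentityRisk(IntEnum):
    """Hypothetical scores; a higher value means more confidentiality risk."""
    SSO_FEDERATED = 1       # SSO through an enterprise identity provider
    BUILT_IN_OR_AD_HOC = 2  # built-in application or ad-hoc identities
    SHARED_PROXY = 3        # proxy or headless accounts on shared devices
    ANONYMOUS = 4           # anonymous read/write access, no protection


def highest_identity_risk(identity_types):
    """Return the riskiest identity type a proposal relies on."""
    return max(identity_types)


# A hypothetical proposal that mixes SSO users with a shared field account.
proposal = [IdentityRisk.SSO_FEDERATED, IdentityRisk.SHARED_PROXY]
print(highest_identity_risk(proposal).name)  # SHARED_PROXY
```

Scoring a proposal by its riskiest identity type, rather than an average, reflects the idea that one weak access path is enough to expose the data.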

If an organization prioritizes confidentiality above all else, it might implement overly restrictive access controls and encryption measures. While this protects sensitive data, it can also hinder accessibility and usability, making it difficult for authorized users to access the data when needed. This can slow down operations and decision-making processes, especially in time-sensitive situations.

Understanding Integrity – Security

In the context of ArcGIS security best practices, understanding how spatial data is secured at rest, in transit and at the endpoint is essential for maintaining data integrity. Data at rest in ArcGIS refers to data stored in databases, file systems or cloud storage, and securing it involves using encryption and access controls to prevent unauthorized modifications. Data in transit is data being transferred between servers, clients or services and requires encryption protocols like HTTPS and secure APIs to protect it from interception and tampering. Endpoint security involves protecting the devices accessing ArcGIS data, such as desktops, mobile devices and servers, through measures like antivirus software, secure configurations and regular updates to ensure data remains unaltered. Implementing these security measures helps maintain the accuracy and reliability of spatial data, preventing unauthorized changes that could compromise its integrity.
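One quick check for data in transit is confirming that every service URL a project depends on uses HTTPS. Here is a small sketch using only the Python standard library; the URLs are placeholders I made up for illustration, and the check looks only at the URL scheme, not at certificates or server configuration.

```python
from urllib.parse import urlparse


def insecure_urls(urls):
    """Return the URLs that do not use the https scheme."""
    return [url for url in urls if urlparse(url).scheme != "https"]


# Hypothetical service endpoints listed in a project proposal.
service_urls = [
    "https://gis.example.com/server/rest/services/Parcels/FeatureServer",
    "http://legacy.example.com/wms",
]
print(insecure_urls(service_urls))  # ['http://legacy.example.com/wms']
```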

While maintaining accurate and reliable data is crucial, it shouldn't come at the cost of accessibility or confidentiality. For example, implementing extensive validation and verification processes to ensure data integrity might slow down data access and processing times, impacting the availability of data for critical operations. Additionally, if these processes are too stringent, they might inadvertently restrict access to authorized users, affecting the overall usability of the data.

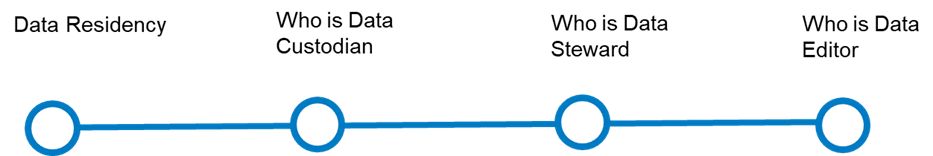

Understanding Integrity – Data Governance

Data governance deserves its own detailed discussion, but briefly: understanding it early in a project proposal is crucial for maintaining data integrity, security and compliance. For ArcGIS, data governance means knowing where the spatial data will reside and identifying key roles: the data custodian (responsible for the technical environment), the data steward (overseeing data quality and policies), the data owner (accountable for data use and access) and the data editor (managing data updates). This clarity ensures spatial data is accurately managed, securely stored and appropriately accessed, which is vital for effective spatial analysis and decision-making. Good governance also helps prevent data breaches, ensures regulatory compliance and facilitates efficient data management, leading to successful project outcomes. A RACI chart is typically ideal for visually representing who is responsible, accountable, consulted and informed regarding data.
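A RACI chart can also be kept as plain data and checked automatically. The sketch below is illustrative only: the activities and the letter assigned to each role are assumptions I made up for the example, and the two rules it checks (exactly one Accountable, at least one Responsible per activity) are a common RACI convention rather than anything specific to ArcGIS.

```python
# Hypothetical RACI assignments for a spatial data project.
# Keys are activities; values map each governance role to R, A, C or I.
raci = {
    "Maintain hosting environment": {
        "custodian": "R", "owner": "A", "steward": "C", "editor": "I",
    },
    "Define data quality rules": {
        "steward": "R", "owner": "A", "custodian": "C", "editor": "I",
    },
    "Update feature attributes": {
        "editor": "R", "owner": "A", "steward": "C", "custodian": "I",
    },
}


def raci_problems(chart):
    """List activities lacking exactly one Accountable or any Responsible."""
    problems = []
    for activity, roles in chart.items():
        codes = list(roles.values())
        if codes.count("A") != 1:
            problems.append(f"{activity}: needs exactly one Accountable")
        if "R" not in codes:
            problems.append(f"{activity}: needs at least one Responsible")
    return problems


print(raci_problems(raci))  # []
```

An empty list means every activity has a clear owner; any gaps are worth resolving before the project is approved.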

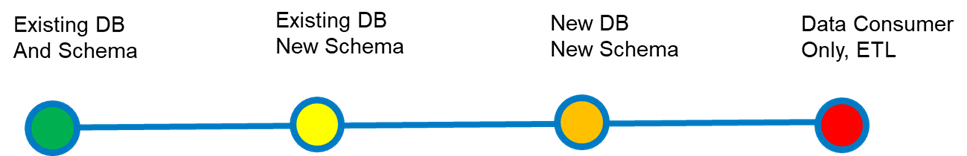

Understanding Availability – Data Model

Understanding how a proposed project will utilize data models is crucial because it helps manage risks associated with different levels of changes. For instance, using existing geodatabases and layers without altering schemas is low risk, while introducing new schemas on existing geodatabases and layers presents a bit more risk. The risk increases further if the project requires entirely new geodatabases, layers and schemas. The highest risk occurs when a project involves configuring applications that consume data not in an Esri-compatible format, necessitating significant extract, transform and load (ETL) processes. That brings us to our next topic of availability: data types.
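The escalation described above reads naturally as a short decision ladder. This is a rough sketch under my own assumptions: the function name, the three yes/no inputs and the risk labels are hypothetical and simply mirror the four situations in the paragraph.

```python
def data_model_risk(esri_compatible, new_geodatabase, new_schema):
    """Return a hypothetical risk label for a project's data model changes."""
    if not esri_compatible:
        return "highest"  # significant ETL is needed before the data is usable
    if new_geodatabase:
        return "high"     # entirely new geodatabases, layers and schemas
    if new_schema:
        return "medium"   # new schemas on existing geodatabases and layers
    return "low"          # existing geodatabases and layers, schemas untouched


print(data_model_risk(esri_compatible=True, new_geodatabase=False, new_schema=True))
# medium
```

The checks run from most to least severe, so a project that triggers several conditions is labelled by its worst one.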

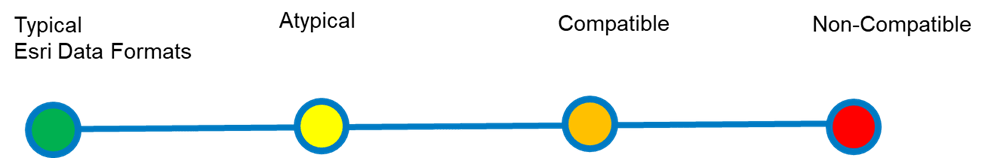

Understanding Availability – Data Types

When evaluating project proposals, it's crucial to understand the data layers involved, including which are foundational and which are dynamic, as well as their formats. Ideally, these layers should be in common Esri data formats, such as those within a geodatabase, the ArcGIS REST services API or supported raster types. Projects that require or ingest atypical data types, such as legacy file types like shapefiles and coverages, increase the risk. The risk is even higher with non-Esri data layers due to the lack of control over their API or SDK compatibility, except for OGC-compliant layers and services. Non-compatible or non-OGC compliant datasets pose the highest risk, as they demand significant resources for extract, transform and load (ETL) processes. This may require a real-time ETL server like GeoEvent, Koop, FME Server or another custom solution. Understanding these factors helps in planning, resource allocation and ensuring the project's success while maintaining data integrity and quality.
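During triage it can help to tally a proposal's layers by format group so the ETL effort is visible up front. The following is a minimal sketch: the group names, the list of formats in each group and the sample layers are all assumptions for illustration, and anything the lookup does not recognize is simply flagged for ETL review rather than judged.

```python
from collections import Counter

# Hypothetical format groupings; adjust to what your deployment supports.
FORMAT_GROUPS = {
    "file geodatabase": "common Esri",
    "enterprise geodatabase": "common Esri",
    "arcgis rest service": "common Esri",
    "shapefile": "legacy Esri",
    "coverage": "legacy Esri",
    "wms": "OGC-compliant",
    "wfs": "OGC-compliant",
    "wmts": "OGC-compliant",
}


def classify_format(fmt):
    """Group a source format; unrecognized formats are flagged for ETL review."""
    return FORMAT_GROUPS.get(fmt.strip().lower(), "needs ETL review")


def summarize_layers(layer_formats):
    """Count a proposal's layers per format group."""
    return Counter(classify_format(fmt) for fmt in layer_formats.values())


# Hypothetical layers named in a project proposal.
layers = {
    "parcels": "Enterprise Geodatabase",
    "trails": "Shapefile",
    "imagery": "WMTS",
    "sensors": "vendor CSV feed",
}
print(summarize_layers(layers))
```

A proposal where most layers land in "needs ETL review" is a signal to budget for transformation work before committing to a delivery date.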

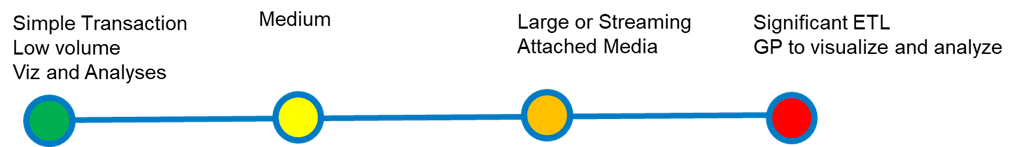

Understanding Availability – Data Volume

Data volume considerations include bandwidth requirements, data size, the number and type of transactions (input/output) and the type of data involved. Projects involving easily hosted and distributed data are lower risk compared to those with significant data sizes and attached media. For example, vector data with many vertices, such as sub-meter elevation isolines, will be slower to deliver than 10-meter elevation isolines. Higher resolution data takes longer to render on screen, but this can be mitigated using caching and tiling techniques. Layers with attached media will also load more slowly. Additionally, layers with editing and versioning enabled will have associated service overhead.
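To see why vertex density matters, a back-of-the-envelope estimate is enough. The sketch below assumes each vertex is stored as two 64-bit floating-point values (16 bytes) and ignores attributes, encoding overhead and compression; the vertex counts are made-up figures for illustration, not measurements from a real dataset.

```python
BYTES_PER_VERTEX = 16  # assumption: x and y each stored as a 64-bit float


def raw_coordinate_megabytes(vertex_count):
    """Rough uncompressed coordinate payload in megabytes (10**6 bytes)."""
    return vertex_count * BYTES_PER_VERTEX / 1_000_000


# Hypothetical vertex counts for the same area at two contour intervals.
coarse = raw_coordinate_megabytes(500_000)
fine = raw_coordinate_megabytes(25_000_000)
print(f"coarse: {coarse:.0f} MB, fine: {fine:.0f} MB")  # coarse: 8 MB, fine: 400 MB
```

Even this crude estimate shows how quickly a denser layer grows, which is where caching and tiling earn their keep.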

Understanding how users will interact with the data (view-only or editing) helps inform the best way to model and make the data available. Incorporating big data into the organization's data offerings has tangible business benefits. Enabling big data geoanalytics empowers data scientists to extract insights from large datasets like GPS, AIS, human movement and other moving sensor data. Ultimately, user requirements for data interaction will determine the underlying infrastructure deployment.

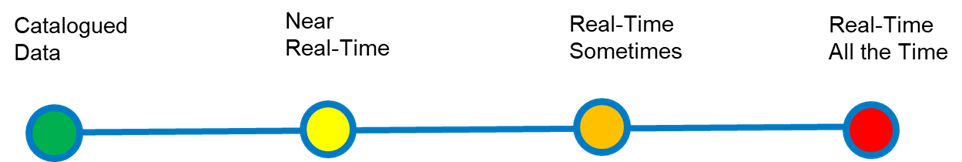

Understanding Availability – Timeliness

Understanding Availability – Data Timeliness

Understanding the temporal accuracy of data is crucial alongside data volume requirements. User requirements will dictate data delivery methods, but generally, continuously streaming real-time data carries higher risk than enabling users to discover and work with catalogued data. Catalogued (also known as system-of-record) data is considered authoritative as it undergoes rigorous QA/QC workflows, whereas real-time data may not benefit from such thorough validation. This risk can be mitigated through intermediate processes, such as using distributed collaboration to manage both catalogued and real-time data effectively.

At this point, I would like to share a couple of resources that have been very helpful for me when working with clients to design ArcGIS Enterprise deployments, set up enterprise geodatabases and optimize these solutions for better data availability.

- The ArcGIS Well-Architected Framework is a collection of concepts, patterns and practices to help organizations design, build and operate ArcGIS systems.

- The ArcGIS Geodatabases Resources is a collection of curated articles, help documentation and reference materials to help organizations manage spatial data.

- The Implementing ArcGIS Blog is an Esri community forum where expert Esri staff share their knowledge and wisdom on configuring and tuning ArcGIS Enterprise. The community also shares applets we can run to report, analyze, configure and tune our deployments to better serve our clients’ data needs.

Stay tuned for my last blog post in this series, where I will explore the project triage process at the project management level.

Read part 6 - now available